TERMS OF REFERENCE (TOR) FOR END TERM EVALUATION OF SELF-PACED CHPS ELEARNING STUDY

Living Goods

Temporary

On-site

Busia

An endline evaluation to assess the efficiency & effectiveness of the eLearning platform in improving knowledge and competence of CHWs

Location: Matayos & Teso South Busia

Pilot Period: January 2025 - August 2025

Reporting to: Director, Program Strategy & Excellence, and dotted line to Director, Evidence & Insights

1.0 Background

The eLearning study in Busia County, Kenya, was initiated to determine the extent to which the capacity of Community Health Promoters (CHPs) can be enhanced through a digital learning platform on their mobile phones. The initiative forms part of a broader effort to modernize and strengthen community health service delivery by leveraging cost-efficient, affordable, and easily scalable approaches, such as digital technology, for training, performance tracking, and knowledge retention. CHPs play a critical role in maternal and child health service provision, including antenatal care (ANC), postnatal care (PNC), and the prevention and management of diseases such as pneumonia and diarrhea. However, traditional in-person training models are expensive and difficult to sustain, and have shown limitations in consistency, accessibility, and cost-effectiveness. This has prompted the need to explore alternative, scalable, and sustainable training modalities that mitigate logistical barriers and ensure equitable access to continuous capacity building.

The study is guided by the Monitoring, Evaluation, and Learning (MEL) Framework, which outlines six key pathways: enhanced learning and retention, improved knowledge translation, increased accessibility and cost-effectiveness, strengthened capacity of CHWs, strengthened feedback loops, and scalable and sustainable digital learning solutions. Using a Theory of Change (ToC) and Diffusion of Innovation (DoI) approach, the study seeks to understand how different groups of Community Health Promoters (CHPs) engage with the digital platform and what factors influence their adoption and sustained use. By mapping the expected outcomes and underlying assumptions, the ToC framework will guide the analysis of how key inputs such as digital training, supportive supervision, and platform usability contribute to improved CHP performance and service delivery. Additionally, the study will explore how to optimize training delivery through the Learning Management System (LMS) to improve accessibility, cost-effectiveness, knowledge retention, and service quality across diverse user groups. The evaluation will measure progress against clear indicators such as exam pass rates, course completion, ANC/PNC coverage improvements, user satisfaction, and cost-efficiency. Ultimately, the goal is to determine whether the eLearning model can serve as a viable alternative or complement to in-person training, and how it can be optimized for national scale-up within Kenya’s digital health ecosystem.

2.0 Purpose and Scope of Work

2.1 Purpose of the evaluation

The purpose of this evaluation is to assess the effectiveness, relevance, cost-effectiveness, and scalability of the eLearning platform introduced for Community Health Promoters (CHPs) in Busia County, Kenya. Specifically, the evaluation will assess:

- The extent to which the platform has improved learning and knowledge retention, skill application, and service delivery outcomes.

- The alignment of the platform’s content, usability, interface and delivery with the learning needs of CHPs, supervisors, and communities they serve.

- Factors influencing adoption and usage of the platform, including the applicability of the Theory of Change (ToC) and Diffusion of Innovation (DoI).

- The comparative value of digital learning relative to traditional in-person methods.

- The platform’s cost-effectiveness, operational feasibility, and potential for scale and integration within the MoH system.

2.2 Scope of Work

2.2.1 Targeted Population

The evaluation focuses on:

- Community Health Promoters (CHPs) (Government workers): 205 CHPs (100%) from Matayos Sub-County and 60 CHPs from Teso South Sub-County.

- Supervisors/Peer Coaches and Trainers (Living Goods staff): Key actors involved in planning, delivering, and monitoring digital learning content.

- Community Health Assistants (CHAs) (Government staff): Support personnel who assist CHPs with field-based learning and technical navigation of the LMS.

- Matayos Sub-County (Learning Site): CHPs with higher digital literacy and prior LMS experience.

- Teso South Sub-County (Implementation Site): CHPs with lower digital literacy and limited prior LMS experience.

The evaluation covers the entire pilot phase of the eLearning initiative, January 2025 to August 2025, as per project framework indicators. The evaluation study should run in September 2025 for 21 days, subject to alignment with the consultant's workplan.

2.2.4 Methodology

The evaluation will adopt a mixed-methods approach to comprehensively assess the performance, impact, and scalability of the eLearning platform for Community Health Promoters (CHPs) in Busia County. This approach ensures triangulation of findings by combining quantitative data analysis with qualitative insights, enabling a robust understanding of program outcomes across different sub-counties and user groups.

Quantitative Methods

To measure effectiveness, efficiency, and impact, structured pre- and post-training assessments will be analyzed using descriptive and inferential statistics. These will include:

- Exam pass rates: Proportion of CHPs achieving ≥80% score in module quizzes and final assessments.

- Course completion rates: LMS logs will track module access, time spent, and completion status.

- Service delivery indicators: ANC attendance (first trimester), PNC coverage within 24 hours, pneumonia diagnosis accuracy, and referrals for malnutrition will be extracted from health registers and compared before and after training.

- Usage analytics: Metrics such as login frequency, module revisit rates, quiz attempts, and average time per module will be extracted from the LMS backend.

- Cost-efficiency: Cost per CHP trained via eLearning will be compared against historical costs of in-person training using MoH expenditure records.

- Data will be analyzed using SPSS, Stata, or R, applying statistical tests (e.g., t-tests, chi-square) to detect significant changes over time and between early and late adopters.
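
As a rough illustration of the pre/post analysis described above, the sketch below computes the pass rate at the 80% threshold and a paired t statistic using only the Python standard library. The function names and the five sample scores are hypothetical and not drawn from the study data. In practice the consultant would use SPSS, Stata, or R and would also report a p-value.

```python
from math import sqrt
from statistics import mean, stdev

PASS_MARK = 80.0  # pass threshold in percent, from the exam pass rate indicator


def pass_rate(scores, pass_mark=PASS_MARK):
    """Proportion of CHPs scoring at or above the pass mark."""
    return sum(s >= pass_mark for s in scores) / len(scores)


def paired_t_statistic(pre, post):
    """t statistic for paired pre/post scores, with n - 1 degrees of freedom."""
    diffs = [after - before for before, after in zip(pre, post)]
    return mean(diffs) / (stdev(diffs) / sqrt(len(diffs)))


# Hypothetical scores for five CHPs, for illustration only.
pre = [60.0, 72.0, 55.0, 81.0, 68.0]
post = [78.0, 85.0, 70.0, 90.0, 80.0]

print(f"pre-training pass rate:  {pass_rate(pre):.0%}")
print(f"post-training pass rate: {pass_rate(post):.0%}")
print(f"paired t = {paired_t_statistic(pre, post):.2f}, df = {len(pre) - 1}")
```

The paired design is assumed here because the same CHPs sit both assessments. An unpaired test would be needed if pre and post cohorts differ.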

Qualitative Methods

To understand adoption patterns, user experience, barriers/facilitators, and sustainability potential, the following methods will be employed:

- Key Informant Interviews (KIIs): Conducted with supervisors, CHAs, and CHPs representing innovators, early adopters, and laggards.

- Focus Group Discussions (FGDs): Separate sessions with male and female CHPs in Matayos and Teso South to explore contextual challenges and peer dynamics.

- Thematic coding: Qualitative responses will be transcribed, coded using NVivo, and thematically analyzed based on DoI constructs (relative advantage, compatibility, complexity, trialability, observability).

- User feedback surveys: Structured surveys capturing satisfaction levels, perceived usability, and recommendations for improvement.

Each evaluation criterion will be linked to specific metrics:

- Effectiveness: Measured through improvements in knowledge scores (pre/post), service delivery consistency (ANC/PNC), and diagnostic accuracy.

- Impact: Assessed through community-level health indicators (e.g., increased first-trimester ANC, improved referral rates).

- Sustainability: Evaluated via the cost-effectiveness analysis (comparing in-person training and eLearning), factoring in ongoing technical support systems and LMS revisit behavior.

- Adoption: Classified using LMS usage patterns mapped against DoI categories and validated through interviews and self-reporting.

- Equity: Analyzed through subgroup comparisons between Matayos and Teso South, focusing on digital literacy, device access, and learning outcomes.

- Any additional evaluation metrics.
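
For the equity criterion, one possible subgroup comparison is a chi-square test on pass/fail counts by sub-county. The sketch below is an assumption about how that comparison might be set up, not a prescribed method. The counts are hypothetical, and the function name `chi_square_2x2` is introduced only for illustration.

```python
def chi_square_2x2(a, b, c, d):
    """Pearson chi-square statistic (no continuity correction) for [[a, b], [c, d]]."""
    n = a + b + c + d
    return n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))


# Critical value of the chi-square distribution at alpha = 0.05 with 1 degree of freedom.
CRITICAL_5PCT_DF1 = 3.841

# Hypothetical counts: rows are sub-counties, columns are passed / did not pass.
matayos_passed, matayos_failed = 40, 10
teso_passed, teso_failed = 30, 20

stat = chi_square_2x2(matayos_passed, matayos_failed, teso_passed, teso_failed)
significant = stat > CRITICAL_5PCT_DF1
print(f"chi-square = {stat:.2f}, significant at 5%: {significant}")
```

With small expected cell counts, an exact test would be a safer choice than the chi-square approximation.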

The Consultant will lead all aspects of the evaluation including:

- Designing and finalizing data collection tools (KII guides, FGD protocols, survey questionnaires).

- Conducting fieldwork, managing data quality, and ensuring ethical compliance.

- Performing data analysis and interpretation and drafting reports in line with OECD-DAC standards.

- Presenting findings and recommendations to stakeholders and revising based on LG feedback.

LG will provide:

- Technical and logistical support during field visits.

- Access to LMS usage data, baseline and midline results, and existing documentation.

- Coordination with county health officials and CHP supervisors for participant mobilization.

- Review and feedback on draft and final reports to ensure alignment with program goals and implementation realities.

3.1 Overall objective

The overall objective of this evaluation is to assess the effectiveness, relevance, cost-effectiveness, and scalability of the eLearning platform for Community Health Promoters (CHPs) in Busia County, Kenya.

3.2 Specific Objectives

- To determine whether the eLearning initiative is appropriate, contextually relevant, and responsive to the needs of CHPs, supervisors, and the communities they serve.

- To evaluate the extent to which the eLearning platform has achieved its stated learning and service delivery outcomes as defined in the MEL Framework.

- To understand how efficiently resources were used to implement the eLearning program compared to traditional in-person training.

- To assess the broader effects of the eLearning program on CHP capacity, service delivery consistency, and community-level health indicators.

- To determine whether the eLearning platform can be sustained beyond pilot funding and integrated into existing systems.

- To analyze how different adoption behaviors (innovators, early adopters, laggards) influence the effectiveness of the eLearning platform.

- To compare the impact of eLearning with that of traditional in-person training on CHP performance and health outcomes.

- To ensure that the eLearning platform is inclusive and addresses disparities in access and learning outcomes.

- To capture insights gained during implementation and inform recommendations for future scale-up and adaptation.

- To provide actionable recommendations for optimizing the eLearning platform and supporting sustainable scale-up.

Result 1: Understanding Adoption Patterns Based on Diffusion of Innovation Theory Categories

Purpose:

- To identify which CHPs fall into each adoption category and explore the factors influencing their progression from one group to another.

- Can CHPs be clearly categorized into innovators, early adopters, early majority, late majority, and laggards based on LMS usage patterns?

- What percentage of CHPs in each sub-county (e.g., Matayos vs. Teso South) fall into each adoption category?

- What characteristics (e.g., age, digital literacy, motivation, access to resources) distinguish early adopters from late adopters?

- How did early adopters influence or support late adopters in navigating the LMS?

- Did any laggards eventually transition to earlier adopter groups? If so, what interventions facilitated this shift?
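
One way to approach the categorization questions above is to rank CHPs by how quickly they adopted the platform and apply the standard DoI shares (2.5% innovators, 13.5% early adopters, 34% early majority, 34% late majority, 16% laggards). The sketch below assumes that "days from launch to first completed module" is the adoption measure. That measure, the CHP identifiers, and the function name are all hypothetical, and the evaluation may define adoption differently.

```python
# Standard DoI adopter shares, listed in order of adoption.
CATEGORY_SHARES = [
    ("innovators", 0.025),
    ("early adopters", 0.135),
    ("early majority", 0.34),
    ("late majority", 0.34),
    ("laggards", 0.16),
]


def classify_adopters(days_to_adoption):
    """Map each CHP id to an adopter category by rank of adoption time."""
    ranked = sorted(days_to_adoption, key=days_to_adoption.get)
    n = len(ranked)
    labels = {}
    for position, chp_id in enumerate(ranked):
        percentile = (position + 0.5) / n  # mid-rank percentile, strictly between 0 and 1
        cumulative = 0.0
        for name, share in CATEGORY_SHARES:
            cumulative += share
            if percentile <= cumulative:
                labels[chp_id] = name
                break
        else:
            labels[chp_id] = "laggards"  # guard against floating-point rounding
    return labels


# Hypothetical data: 40 CHPs who adopted on days 1 to 40.
days = {f"chp{i:02d}": i for i in range(1, 41)}
labels = classify_adopters(days)
print(labels["chp01"], labels["chp40"])
```

Rank-based cut-offs always produce the same proportions per category by construction, so comparing Matayos with Teso South would require ranking all CHPs together and then tabulating by sub-county.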

Purpose:

- To assess how CHPs perceive the eLearning platform in terms of its relative advantage, compatibility, complexity, trialability, and observability.

A. Relative Advantage

- To what extent do CHPs perceive eLearning as more advantageous than in-person training in terms of time, cost, flexibility, and accessibility?

- How does perceived advantage differ between early and late adopters?

- Has the use of eLearning reduced travel time and costs compared to traditional training?

B. Compatibility

- How compatible is the LMS with the daily routines and responsibilities of CHPs?

- Does the content align with the health protocols and tools they use in the field (e.g., eCHIS, Malaria RDTs)?

- Are there cultural or contextual mismatches that affect how different CHPs relate to the platform?

C. Complexity

- How easy or difficult do CHPs find it to navigate the LMS?

- What specific features or functions are perceived as complex or confusing?

- Did digital literacy modules help reduce perceived complexity among late adopters?

D. Trialability

- How often do CHPs try out the LMS before fully committing to using it regularly?

- Is offline functionality sufficient to allow CHPs to test the platform without internet connectivity?

- Were CHPs able to complete at least one full module before deciding whether to continue?

E. Observability

- Are improvements in knowledge or performance visible to CHPs, supervisors, or community members after using the LMS?

- Did supervisors observe better ANC/PNC service delivery among CHPs who completed eLearning modules?

- Can CHPs demonstrate improved diagnostic skills (e.g., pneumonia breath counting) due to LMS learning?

Purpose:

- Identify systemic and personal barriers affecting adoption, based on the challenges identified in the baseline analysis.

- What were the top three reasons for low engagement or incomplete module completion?

- Did data costs significantly hinder engagement, especially among CHPs in Teso South?

- How did device limitations (RAM, screen size, battery life) affect user experience and learning outcomes?

- Were technical faults (e.g., app freezing, video playback issues) common across all users?

- Did peer mentorship models help bridge gaps between early and late adopters?

Purpose:

- Measure how the adoption of the LMS translated into changes in knowledge, behavior, and service delivery.

- Was there a minimum 15% improvement in pre-post test scores for supervisors and CHPs who used the LMS?

- Did CHPs who used the LMS consistently perform better in field assessments (e.g., ANC/PNC visits, pneumonia diagnosis)?

- Is there a correlation between frequency of LMS use and performance metrics (e.g., ANC attendance rates, PNC coverage)?

- Did CHPs refer back to LMS modules during home visits or community education sessions?

- Were there measurable increases in the number of women attending first-trimester ANC or children diagnosed with pneumonia?
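
The 15% improvement and correlation questions above could be checked along the following lines. This is a minimal sketch: it treats the 15% target as a relative change in mean score, which is an assumption because the text does not say whether the target is relative or in percentage points. The usage and performance figures are hypothetical.

```python
from math import sqrt
from statistics import mean

TARGET_IMPROVEMENT = 0.15  # 15% target, interpreted here as relative change (assumption)


def relative_improvement(pre_mean, post_mean):
    """Relative change in mean score from pre-test to post-test."""
    return (post_mean - pre_mean) / pre_mean


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Hypothetical figures for five CHPs, for illustration only.
logins_per_month = [2, 4, 6, 8, 10]
anc_visits_recorded = [3, 5, 4, 8, 9]

improvement = relative_improvement(pre_mean=60.0, post_mean=72.0)
print(f"improvement = {improvement:.0%}, target met: {improvement >= TARGET_IMPROVEMENT}")
print(f"Pearson r = {pearson_r(logins_per_month, anc_visits_recorded):.2f}")
```

A correlation of this kind shows association only. It does not establish that LMS use caused the change in performance.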

Purpose:

- Understand how the change process (adoption of eLearning via DoI theory) affects actual health outcomes delivered by CHPs.

A. Maternal Health Indicators

- Did CHPs trained via eLearning improve ANC attendance rates in their communities?

- Did the number of women attending ANC in the first trimester increase after the introduction of the LMS?

- Was there an increase in women completing ≥4 FANC visits following eLearning roll-out?

- Did PNC coverage within 24 hours improve, particularly in areas where CHPs reported lower baseline confidence?

- Did CHPs show increased accuracy in diagnosing diarrhea cases after completing relevant modules?

- Did knowledge gaps between Matayos and Teso South narrow following targeted digital literacy and refresher sessions?

- Were CHPs able to apply nutrition education consistently in the community?

- Was there an increase in referrals or follow-ups related to malnutrition due to improved CHP capacity?

- Were there fewer disparities in knowledge application between sub-counties (e.g., Matayos vs. Teso South)?

- Did the eLearning platform help bridge knowledge gaps in critical areas such as pneumonia diagnosis, ANC/PNC touchpoints, and diarrhea management?

- Did CHPs in remote areas become more consistent in recognizing danger signs and making appropriate referrals?

Purpose:

- Analyze how different adoption behaviors influence the effectiveness of CHPs in delivering health services.

- Did early adopters achieve better health outcome indicators (e.g., ANC attendance, pneumonia diagnosis) compared to late adopters?

- How did peer mentorship models improve health service delivery among late adopters?

- Did gamification features (leaderboards, badges) motivate CHPs to retake modules or improve quiz scores, leading to better health outcomes?

- Were CHPs who accessed the LMS more frequently associated with better health data reporting and follow-up?

Purpose:

- Capture insights gained during implementation and evaluate how adaptations improved adoption and learning outcomes.

- What were the five key lessons learned in conducting eLearning for CHPs and supervisors?

- Which adaptations (e.g., lighter app size, bulk download, resume feature) proved most effective in improving adoption and health outcomes?

- How were challenges related to offline functionality, device compatibility, and technical support addressed?

- Were user feedback loops used effectively to inform iterative improvements to the LMS?

Result 8: Comparative Evaluation: eLearning vs. In-Person Training (Impact on Health Outcomes)

| Indicator | In-Person Baseline (Reported in Baseline Survey) | Comparison Outcome (eLearning platform) |
| --- | --- | --- |
| Exam Pass Rate | Varies by cohort | Higher? Lower? Equal? |
| Course Completion | ~85% attendance-based | More flexible? Motivating? |
| Cost per CHP | High (travel, meals, venue) | Cheaper? More scalable? |
| User Satisfaction | High interpersonal value | Preferred modality? |
| Refresher Engagement | Quarterly review meetings | More frequent? Effective? |
| Skill Application | Field observation | Better retention? Faster recall? |

The evaluation will use criteria based on the internationally recognized standards of relevance, effectiveness, efficiency, impact, sustainability, and scalability.

Relevance: Is the eLearning initiative appropriate and aligned with the needs of stakeholders (in this case, the CHPs)?

Purpose:

- Assess whether the eLearning platform addresses the actual training needs of CHPs and aligns with national health priorities, digital strategies, and service delivery goals.

- To what extent does the eLearning content reflect the real-world responsibilities and challenges faced by CHPs in Busia County?

- Does the curriculum align with the Ministry of Health (MoH) protocols and community health worker competencies?

- Was the eLearning platform designed with input from CHPs, supervisors, and implementers to ensure contextual appropriateness?

- How well does the LMS complement other digital tools used by CHPs (e.g., eCHIS, WhatsApp)?

- Is the platform relevant to both early adopters and late adopters, considering differences in digital literacy and age?

- Does the eLearning model support equity in access to training across sub-counties (e.g., Matayos vs. Teso South)?

Purpose:

- Determine whether the eLearning platform successfully met its stated outcomes related to knowledge gain, skill development, and service delivery improvements.

- Did the percentage of CHPs passing final exams reach the target of 90%?

- Were CHPs able to apply the knowledge gained through eLearning to improve maternal and child health services (e.g., ANC/PNC visits, pneumonia diagnosis)?

- Did at least 80% of enrolled CHPs complete each course, as per MEL framework targets?

- Were supervisors able to observe improved competency in the field among CHPs trained via eLearning?

- Did the number of women attending first-trimester ANC increase after the rollout of eLearning?

- Did the LMS contribute to a measurable improvement in confidence levels, particularly among lower-performing CHPs in Teso South?

Purpose:

- Evaluate how well resources were utilized to deliver the eLearning program compared to traditional in-person training methods.

- Was the cost per CHP trained reduced by 15% compared to traditional in-person training?

- What were the major cost drivers in implementing and maintaining the LMS?

- How many CHPs were trained using the LMS versus in-person sessions within the same time frame?

- Was the offline functionality sufficient to allow learning without incurring data costs?

- How much time did CHPs spend on self-paced learning compared to time lost in physical training?

- Did the LMS reduce logistical expenses such as travel, venue rental, and meals for trainers and participants?
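
The cost question above reduces to a simple comparison of cost per CHP trained under each modality. The sketch below shows the arithmetic under stated assumptions: the cost totals and the in-person cohort size are hypothetical, and only the 265 CHPs (205 in Matayos plus 60 in Teso South) and the 15% target come from this document.

```python
TARGET_REDUCTION = 0.15  # 15% reduction target from the efficiency questions


def cost_per_chp(total_cost, chps_trained):
    """Average cost of training one CHP."""
    return total_cost / chps_trained


def cost_reduction(in_person_cost, elearning_cost):
    """Relative reduction in cost per CHP when moving from in-person to eLearning."""
    return (in_person_cost - elearning_cost) / in_person_cost


# Hypothetical totals in KES; real figures would come from expenditure records.
in_person = cost_per_chp(total_cost=1_000_000, chps_trained=200)
elearning = cost_per_chp(total_cost=1_060_000, chps_trained=265)

reduction = cost_reduction(in_person, elearning)
print(f"in-person: {in_person:,.0f} KES per CHP")
print(f"eLearning: {elearning:,.0f} KES per CHP")
print(f"reduction: {reduction:.0%}, target met: {reduction >= TARGET_REDUCTION}")
```

Which costs count toward each total (platform development, devices, data bundles, supervision time) is a design decision for the evaluation and strongly affects the result.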

Purpose:

- Measure the broader effects of the eLearning program on CHP capacity, health service delivery, and community health outcomes.

- Has eLearning contributed to increased numbers of women attending ANC in the first trimester (target: 1,500)?

- Has the proportion of pregnant women completing ≥4 FANC visits reached the target of 75%?

- Did the number of children diagnosed with pneumonia reach 2,000, and was accuracy maintained?

- Have CHPs reported greater confidence in identifying danger signs in under-five children after using the LMS?

- Has the use of the LMS led to more consistent application of diagnostic protocols across sub-counties?

- Are supervisors observing better ANC/PNC follow-up behaviors among LMS-trained CHPs?

- Has the LMS improved CHPs' ability to refer cases appropriately and maintain continuity of care?

Purpose:

- Assess whether the eLearning platform can be sustained beyond pilot funding and integrated into existing systems.

- Is the LMS being integrated into regular supervisory practices and performance tracking systems?

- Are there plans to institutionalize the LMS within MoH frameworks or partner agency workflows?

- Has the LMS been whitelisted like eCHIS to reduce ongoing data costs for CHPs?

- Are CHAs being trained as LMS navigators and troubleshooters to support long-term use?

- Is the technical infrastructure scalable to accommodate future expansion to new regions?

- Are there mechanisms in place for regular content updates, bug fixes, and user feedback integration?

- Are CHPs revisiting the LMS regularly for reference materials, indicating long-term utility?

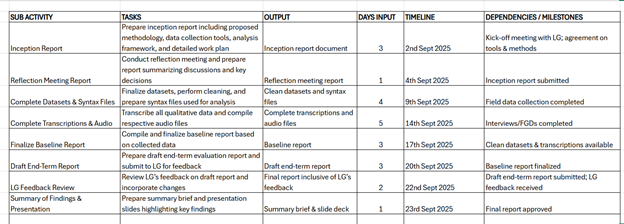

The expected results of the exercise are (refer to the table below):

- Inception report: Including the proposed methodology, data collection tools, analysis framework, and a detailed work plan.

- Reflection meeting report

- Complete datasets and syntax files used for analysis.

- Complete transcriptions of qualitative data and respective audio files.

- Finalized baseline report

- Draft end-term report: Will be presented to LG for input and feedback. LG will give feedback within seven days of receipt of the draft report.

- Final report inclusive of LG’s feedback.

- Summary of findings and presentation.

6.0 Timeline

The assignment is projected for 20 working days.

7.0 Qualifications of the Consultant (Basis of Evaluation Criteria)

- Education: Master’s degree in Public Health, M&E, Social Sciences, or a related field

- Experience:

  - At least 5 years evaluating health programs, especially in maternal and child health

  - Experience with digital health tools and LMS platforms

- Technical Skills:

  - Proficient in NVivo, SPSS, Stata, R, and Excel

  - Familiarity with mobile data tools (ODK, Kobo Toolbox)

- Knowledge:

  - Understanding of OECD-DAC criteria, WHO digital health guidelines, and DoI theory

- Fieldwork Experience:

  - Experience applying both quantitative and qualitative methodologies within a study

- Reporting Skills:

  - Strong ability to produce reports, dashboards, and presentations

Commercial evaluation (Maximum score 30%)

Offer Validity

In accordance with the guidelines set forth in this RFP, the proposal for support services should remain valid for a minimum period of three (3) months starting from the final date of proposal submission. Living Goods will make every effort to conclude negotiations within this period. If Living Goods deems it necessary to extend the validity period of the proposals, the Bidder will be required to agree to such an extension.

Important Due Dates

| Date/Timeline | Description |
| --- | --- |
| 1st September 2025 | Last date for receiving questions and clarification requests on the RFP scope and terms. |
| 4th September 2025 | Responses from LG |
| 9th September 2025 | Proposal submission deadline, 11 PM EAT |
| Week of 15th September | Evaluation & Presentations |
| Week of 15th September | Notifications of intent to award, negotiations & Contract award |
| Week of 22nd September | Project Kick-off |

Notification of Intent

Upon successful negotiation, Living Goods will issue a 'Notification of Intent' to the most competitive bidder(s). It is important to note that this Notification of Intent does not constitute the formation of a contract between Living Goods and the [partner organization] unless certain conditions are met. These conditions may include the results of the standard due diligence procedures to be carried out by Living Goods, formal acceptance of the proposal, commitment of necessary resources, and submission of a performance guarantee, among others. The sole purpose of this Notification of Intent is to express Living Goods' willingness to proceed with the acquisition of the service, subject to the execution of a valid Contract and/or the issuance of a signed purchase order.

Acceptance or Rejection

Living Goods, at its sole discretion, retains the right to accept or reject all proposals. The issuance of this request for proposal does not impose any obligation on Living Goods to take any action concerning any response submitted by a Bidder in response to this request. Living Goods reserves the right to cancel or annul the RFP and the bidding process at its sole discretion, and this will be binding on all participating bidders.

Anti-Corruption Clause

The bidder must not offer, give, or make any illegal or corrupt payments, gifts, considerations, or benefits, directly or indirectly, as inducement or reward for the award or execution of this contract. Any such practice will lead to the contract's termination or other corrective actions as needed. A breach of this clause will be deemed a material breach of the agreement, enabling Living Goods to terminate the contract immediately.

Proposal Submission

All submitted proposals must strictly adhere to the guidelines and requirements outlined in the RFP. Once a bid proposal has been submitted, it is considered final and cannot be modified or amended. Therefore, it is crucial that you carefully review and comply with all RFP instructions before submitting your proposal.

Upon completion of your proposal, it is necessary to affix the signature of either yourself or a duly authorized representative of your company. This signature serves as an acknowledgement that you have thoroughly read the RFP and have prepared the proposal in accordance with the guidelines provided as well as the undertaking that the submitted proposal is a no-deviation submission as per the scope and requirements outlined in the RFP.

Proposal Format and Content

All proposals must include both technical and financial components, submitted separately, with the subject “End Term Evaluation for eLearning Study in Busia, Kenya”.

Ensure the documents below are attached:

- eLearning Concept note

- eLearning MEL result framework

- Baseline analysis

All RFP responses, including the final RFP, must be submitted in soft copy (both Technical and Financial) to procurementglobal@livinggoods.org no later than 9th September 2025.